As we discussed in the previous post, throughout my experience as a Data Analytics consultant I have been required to perform estimation on almost every project I have worked on. Sampling is one of the most commonly used analytics techniques, since most people accept that a properly selected sample can represent an unknown or very large population.

Although sampling is widely used, many people still determine the sample size purely by professional judgement, in other words, gut feeling. In fact, there are several ways to determine the sample size more scientifically and systematically, and a properly determined sample size makes your analysis more justifiable and defensible.

Here we start our calculation with the “Margin of Error”:

Depending on the nature of the project, we first need to determine our precision level (i.e. the Margin of Error), which we would normally set to 5% or 10%.

Next, we set our confidence level, which would normally be 95%. This means that we can be 95% confident that the true population value lies within the margin of error of our sample estimate.

At a 95% confidence level, the area to the left of the z-score (the cumulative probability) is 1 – 0.05 / 2 = 0.975. Therefore the z-score is 1.96.
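The z-score lookup above can also be reproduced without a z-table. A minimal sketch using Python's standard library; the helper name `z_score` is hypothetical, and it relies on `statistics.NormalDist().inv_cdf`, the inverse of the standard normal cumulative distribution function:

```python
from statistics import NormalDist


def z_score(confidence: float) -> float:
    """Two-sided critical z-value for a confidence level such as 0.95."""
    alpha = 1 - confidence  # 0.05 for 95% confidence
    # Area to the left of the critical value: 1 - alpha / 2 = 0.975
    return NormalDist().inv_cdf(1 - alpha / 2)


print(round(z_score(0.95), 2))  # 1.96
```

Changing the argument to 0.99 gives the z-score for a 99% confidence level in the same way.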

Substituting all of the above into the equation, we determine that 385 is the right number of samples to pick from an unknown or very large population.

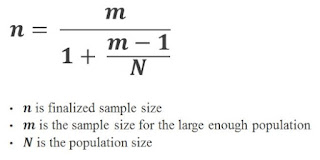
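The equation itself is not written out in the text. The sketch below assumes it is Cochran's formula for estimating a proportion, n0 = z² · p · (1 − p) / e², with the conservative choice p = 0.5 (an assumption, since the post does not state p; 0.5 maximises p · (1 − p)). With z = 1.96 and e = 0.05 this gives 1.96² × 0.25 / 0.05² ≈ 384.16, which rounds up to 385. The function name `sample_size` is hypothetical:

```python
import math
from statistics import NormalDist


def sample_size(margin_of_error: float, confidence: float = 0.95, p: float = 0.5) -> int:
    """Cochran's sample size for a very large (or unknown) population.

    Assumes n0 = z^2 * p * (1 - p) / e^2 with p = 0.5 by default,
    rounded up to the next whole item.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = z ** 2 * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n0)


print(sample_size(0.05))  # 385 (5% margin of error)
print(sample_size(0.10))  # 97 (10% margin of error)
```

Rounding up rather than to the nearest integer ensures the achieved margin of error is no larger than the target, which is why 384.16 becomes 385.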

For a small, finite population, to keep things simple, I am not going to go into detail here. However, we can still simply apply the below formula to the sample size identified above for a population of known, finite size.
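Assuming the formula meant here is the standard finite population correction (it reproduces the 371 figure in the example that follows), it can be written as below, where $n_0$ is the large-population sample size (385 above), $N$ is the population size and $n$ is the adjusted sample size:

$$
n = \frac{n_0}{1 + \dfrac{n_0 - 1}{N}}
$$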

For example, assume the population is known to contain 10,000 items. Applying the formula, we determine that 371 samples (instead of 385) is the right number to pick from a population of 10,000 items.
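The 10,000-item example can be checked with a short sketch. It assumes the standard finite population correction n = n0 / (1 + (n0 − 1) / N); the function name `finite_population_sample_size` is hypothetical:

```python
import math


def finite_population_sample_size(n0: int, population: int) -> int:
    """Adjust a large-population sample size n0 for a finite population N.

    Assumes the standard finite population correction
    n = n0 / (1 + (n0 - 1) / N), rounded up to the next whole item.
    """
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


print(finite_population_sample_size(385, 10_000))  # 371
```

Note that 371 follows from plugging in the already-rounded 385 (385 / 1.0384 ≈ 370.76); plugging in the unrounded 384.16 instead gives about 369.97, which rounds up to 370. As the population grows, the corrected size approaches the original 385.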

I hope the above gives you a high-level understanding of how to determine your sample size, so that “professional judgement” alone is no longer needed when you are asked to provide estimates or justify your sampling method. If you find any errors in the above, or have any thoughts on or interest in this topic, please feel free to drop me a note.

Thanks.